For NN training, you need to do the following preprocessing:

- Fill missing values.
- Convert categorical data into numerical.
- Scale features into the same (or at least similar) range.

Keep in mind that all preprocessing used to prepare the training data must also be applied in production. For NN there are more preprocessing steps, so there are also more steps to implement in the production system.

For RF, you set the number of trees in the ensemble (which is quite easy, because in RF the more trees, the better), and you can use the default hyperparameters and it should work. You need some magic skills to train NN well:

- First, the architecture. How many layers to use? Usually 2 or 3 layers should be enough. How many neurons to use in each layer? What activation functions to use? What weight initialization to use?
- Architecture ready? Then you need to choose a training algorithm. You can start with simple Stochastic Gradient Descent (SGD), but there are many others.
- Let's go with simple SGD: you need to set the learning rate, momentum, and decay.
- Not enough hyperparameters? You need to set a batch size as well (batch: how many samples to show for each weight update).

You know what is funny? Each of the NN hyperparameters mentioned above can be critical.
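To make the SGD hyperparameters concrete, here is a minimal sketch in plain Python of mini-batch SGD with momentum and learning-rate decay. To keep it short, it fits a single linear unit `y = w*x + b` rather than a full NN. The helper name `sgd_momentum`, the default values, and the time-based decay rule `lr / (1 + decay * step)` are illustrative assumptions, not settings taken from any particular library.

```python
import random


def sgd_momentum(xs, ys, lr=0.02, momentum=0.9, decay=0.01,
                 batch_size=4, epochs=200, seed=0):
    """Fit y ~ w*x + b with mini-batch SGD, momentum and time-based lr decay.

    Hypothetical helper for illustration; the hyperparameter values are assumptions.
    """
    rng = random.Random(seed)          # seeded so every run shuffles the same way
    w, b = 0.0, 0.0                    # parameters (weights) being trained
    vw, vb = 0.0, 0.0                  # momentum "velocity" for each parameter
    order = list(range(len(xs)))
    step = 0
    for _ in range(epochs):
        rng.shuffle(order)             # new sample order every epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            # Gradient of the mean squared error over this mini-batch only.
            gw = gb = 0.0
            for i in batch:
                err = (w * xs[i] + b) - ys[i]
                gw += 2.0 * err * xs[i]
                gb += 2.0 * err
            gw /= len(batch)
            gb /= len(batch)
            # Time-based decay: the learning rate shrinks as updates accumulate.
            lr_t = lr / (1.0 + decay * step)
            # Momentum: keep part of the previous update direction.
            vw = momentum * vw - lr_t * gw
            vb = momentum * vb - lr_t * gb
            w += vw
            b += vb
            step += 1
    return w, b


if __name__ == "__main__":
    xs = [i / 10 for i in range(-10, 11)]
    ys = [2.0 * x + 1.0 for x in xs]   # noiseless data from y = 2x + 1
    w, b = sgd_momentum(xs, ys)
    print(f"w={w:.3f}, b={b:.3f}")
```

On noiseless data from `y = 2x + 1`, the sketch should recover `w` close to 2 and `b` close to 1. Changing `lr`, `momentum`, `decay`, or `batch_size` changes how quickly, or whether, it gets there, which is exactly the sensitivity described above.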

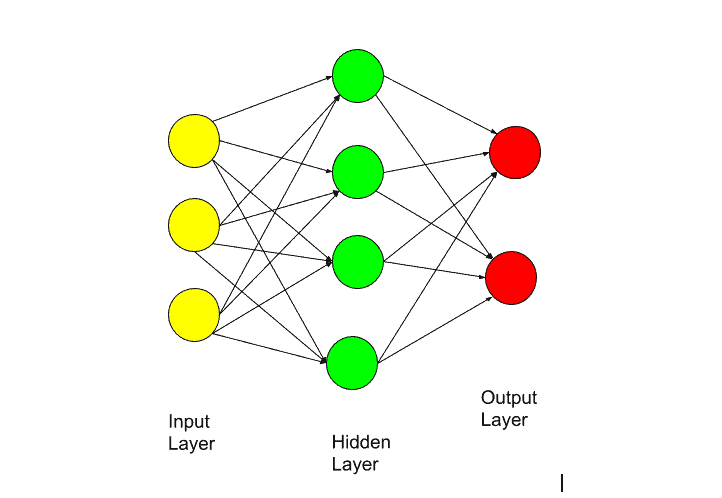

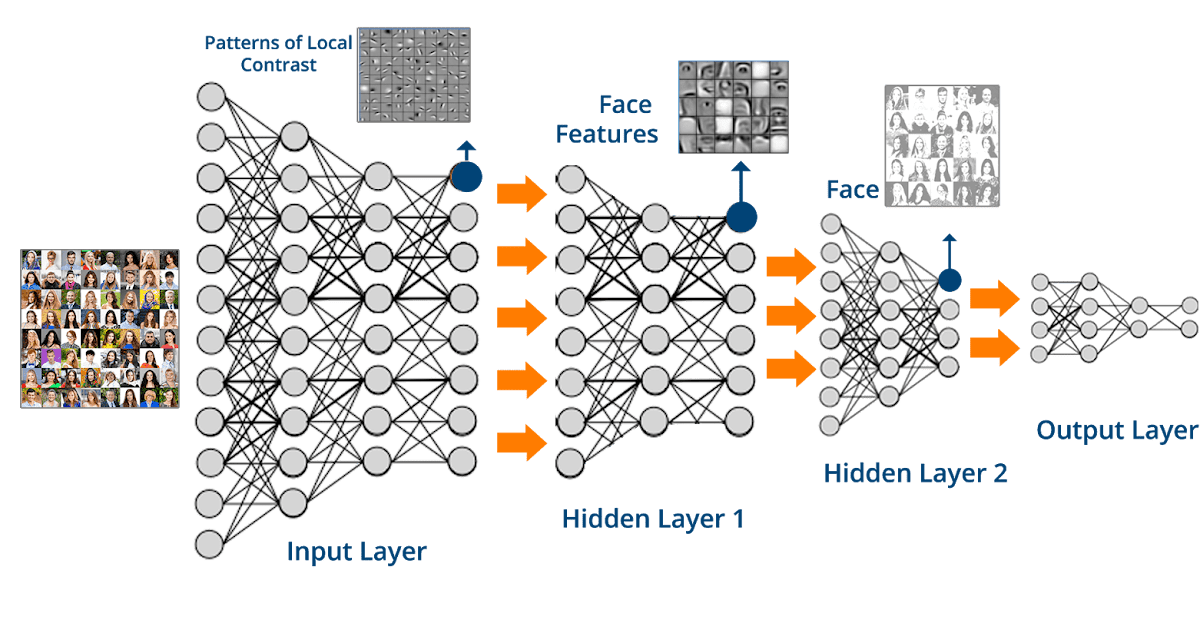

The RF is an ensemble of decision trees. Each decision tree in the ensemble processes the sample and predicts the output label (in the case of classification). The decision trees in the ensemble are independent of each other. The NN is a network of connected neurons, and the neurons cannot operate without the other neurons they are connected to. Usually, the neurons are grouped in layers: each layer processes the data and passes it forward to the next layer, and the last layer of neurons makes the decision.

RF works with tabular data. (What is tabular data? It is data in a table format.) On the other hand, NN can work with many different data types: tabular data, images, audio, and text. Text data can be handled by NN after preprocessing, for example with bag-of-words. In theory, RF can work with such data as well, but in real-life applications the data becomes sparse after such preprocessing, and RF gets stuck.

OK, so now you have some intuition: when you deal with images, audio, or text data, you should select NN. In the case of tabular data, you should check both algorithms and select the better one. However, I would prefer RF over NN because it is easier to use.

In theory, RF should work with missing and categorical data. However, the Sklearn implementation has traditionally not handled this (recent releases add native missing-value support to the forests, but categorical features still have to be encoded as numbers). To prepare data for RF (in Python with the Sklearn package), you need to make sure that:

- There are no missing values in the data.
- Categorical data has been converted into numerical.

Data preprocessing for NN requires filling missing values and converting categorical data into numerical. In addition, NN needs feature scaling. If features have different ranges, there will be problems with model training: features with larger values will be treated as more important during training, which is not desired. What is more, the gradient values can explode and the neurons can saturate, which makes it impossible to train the NN.
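A minimal bag-of-words sketch in plain Python shows why text becomes sparse after this kind of preprocessing: each document turns into a vector with one count per vocabulary word, and most entries are zero. The whitespace tokenization and the helper names `bag_of_words` and `sparsity` are simplifying assumptions; real pipelines usually also strip punctuation and limit the vocabulary size.

```python
def bag_of_words(docs):
    """Turn each document into a vector of word counts (hypothetical helper)."""
    # Vocabulary: every distinct lowercase word, sorted for a stable column order.
    vocab = sorted({word for doc in docs for word in doc.lower().split()})
    index = {word: i for i, word in enumerate(vocab)}
    vectors = []
    for doc in docs:
        counts = [0] * len(vocab)
        for word in doc.lower().split():
            counts[index[word]] += 1
        vectors.append(counts)
    return vocab, vectors


def sparsity(vectors):
    """Fraction of entries that are zero."""
    total = sum(len(v) for v in vectors)
    zeros = sum(1 for v in vectors for c in v if c == 0)
    return zeros / total


if __name__ == "__main__":
    docs = ["the cat sat", "the dog barked", "a bird sang loudly"]
    vocab, X = bag_of_words(docs)
    print(vocab)
    print(X[0])                          # counts for "the cat sat"
    print(f"sparsity: {sparsity(X):.2f}")
```

Even with three short documents, 17 of the 27 entries are zero; with a realistic vocabulary of thousands of words, almost every entry is.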
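The NN preprocessing described above can be sketched in plain Python: fill missing numeric values, one-hot encode categorical columns, and standardize numeric columns to zero mean and unit variance. Mean imputation, one-hot encoding, and standardization are common choices assumed here, not the only options, and `fit_preprocessing`/`transform` are hypothetical helpers. Fitting and transforming are kept separate so the same learned parameters can be reused on production data; for RF, the imputation and encoding steps alone would be enough.

```python
from statistics import mean, pstdev


def fit_preprocessing(rows, numeric, categorical):
    """Learn imputation, encoding and scaling parameters from training rows."""
    state = {"numeric": numeric, "categorical": categorical,
             "fill": {}, "scale": {}, "categories": {}}
    for col in numeric:
        present = [r[col] for r in rows if r[col] is not None]
        fill = mean(present)                       # mean imputation
        filled = [r[col] if r[col] is not None else fill for r in rows]
        state["fill"][col] = fill
        # Standardization parameters; fall back to 1.0 for a constant column.
        state["scale"][col] = (mean(filled), pstdev(filled) or 1.0)
    for col in categorical:
        # Sorted list of known categories gives a stable one-hot column order.
        state["categories"][col] = sorted({r[col] for r in rows if r[col] is not None})
    return state


def transform(rows, state):
    """Apply the learned steps; use the same state for training and production data."""
    out = []
    for r in rows:
        vec = []
        for col in state["numeric"]:
            value = r[col] if r[col] is not None else state["fill"][col]
            mu, sigma = state["scale"][col]
            vec.append((value - mu) / sigma)       # similar ranges for all features
        for col in state["categorical"]:
            # One-hot: unseen or missing categories become all zeros.
            vec.extend(1.0 if r[col] == c else 0.0 for c in state["categories"][col])
        out.append(vec)
    return out


if __name__ == "__main__":
    train = [
        {"age": 20, "income": 1000.0, "city": "A"},
        {"age": None, "income": 3000.0, "city": "B"},
        {"age": 40, "income": 2000.0, "city": "A"},
    ]
    state = fit_preprocessing(train, numeric=["age", "income"], categorical=["city"])
    print(transform(train, state))
```

Without the scaling step, `income` (in the thousands) would dominate `age` (in the tens) in the weighted sums an NN computes.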
Machine learning is a subset of AI, and it consists of the techniques that enable computers to figure things out from data and deliver AI applications.

A multilayer perceptron (MLP) is built from three kinds of layers. The input layer is the initial layer of the network, taking input in the form of numbers. Second, there is the hidden layer, which processes the information received from the input layer; this processing takes the form of computations. There is no restriction on the number of hidden layers; however, an MLP usually has a small number of them. Finally, the last layer, the output layer, is responsible for producing results: the output of the computations applied to the data as it passes through the network.

Another characteristic of MLPs is backpropagation, a supervised learning technique for training a neural network. In simplified terms, backpropagation is a way of fine-tuning the weights of a neural network by propagating the error from the output back into the network. This improves the performance of the network by reducing the errors in its output.

Lastly, due to their simplicity, MLPs usually require short training times to learn the representations in data and produce an output.

Deep learning is an umbrella term for machine learning techniques that make use of 'deep' neural networks. Such networks typically require more powerful computing units than the average computer, for example a device with a Graphics Processing Unit (GPU). Today, deep learning is one of the most visible areas of machine learning because of its success in areas like Computer Vision and Natural Language Processing and, when applied to reinforcement learning, in scenarios like game playing, decision making, and simulation.

Which is better, Random Forest or Neural Network? This is a common question, with a very easy answer: it depends. I will try to show you when it is good to use Random Forest and when to use Neural Network.

First of all, Random Forest (RF) and Neural Network (NN) are different types of algorithms.
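The input, hidden, and output layers and the backpropagation step described above can be sketched in plain Python for a tiny MLP with one hidden layer. The tanh activation, the single linear output unit, the squared-error loss, and the helper names (`init_mlp`, `forward`, `backprop`, `sgd_step`) are illustrative assumptions; the point is only to show how the output error is pushed back through the layers to give one gradient per weight.

```python
import math
import random


def init_mlp(n_in, n_hidden, seed=0):
    """Random weights for input -> hidden (tanh) -> single linear output."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    return W1, b1, W2, b2


def forward(params, x):
    W1, b1, W2, b2 = params
    # Hidden layer: weighted sum of the inputs followed by a tanh activation.
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    # Output layer: one linear unit producing the prediction.
    y = sum(w * hi for w, hi in zip(W2, h)) + b2
    return h, y


def backprop(params, x, target):
    """Return the squared-error loss and its gradient for every weight."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    dy = y - target                                   # dL/dy at the output
    dW2 = [dy * hi for hi in h]                       # output-layer weights
    db2 = dy
    dh = [dy * w for w in W2]                         # error sent back to hidden layer
    dz = [d * (1 - hi ** 2) for d, hi in zip(dh, h)]  # tanh'(z) = 1 - tanh(z)^2
    dW1 = [[dzi * xi for xi in x] for dzi in dz]      # hidden-layer weights
    db1 = dz
    return loss, (dW1, db1, dW2, db2)


def sgd_step(params, grads, lr):
    """Move every weight a small step against its gradient."""
    W1, b1, W2, b2 = params
    dW1, db1, dW2, db2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [b - lr * g for b, g in zip(b1, db1)]
    W2 = [w - lr * g for w, g in zip(W2, dW2)]
    return W1, b1, W2, b2 - lr * db2


if __name__ == "__main__":
    params = init_mlp(n_in=2, n_hidden=3)
    x, target = [0.5, -1.0], 1.0
    for step in range(5):
        loss, grads = backprop(params, x, target)
        print(f"step {step}: loss={loss:.4f}")
        params = sgd_step(params, grads, lr=0.1)
```

Adding more hidden layers repeats the same pattern: each layer receives the error from the layer after it and passes it on to the layer before it.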
